libxm, the demoscene, and softsynth considerations

It's no secret: I love the demoscene and I would love to make my own prod someday. So far, most of my work has been on the audio side of things. (I also ended up falling in love with the JACK API, but that's another issue.) The usual approach, when making a demo, is to get the audio sorted out first. It's a lot easier to organise visuals around sound than the other way around.

Why not reuse existing stuff?

- It's rarely libre;

- It was rarely designed with GNU/Linux in mind;

- Where's the fun in that?

Here comes libxm

That's one of the reasons why I wrote libxm in the first place. I couldn't find a small, libre library to play back FastTracker 2 XM files (there are probably many, I just did not look hard enough). The plan was to write such a library, compose an XM module, then embed the whole thing in a small executable.

The libxm idea was a mixed success. It works, and the library compresses down to about 10KiB of machine code. (For example: the xmtoau Linux binary, packed, is about 9.6KiB in size.)

I also used libxm, for fun, in non-demo related projects. Most notably, libxm.js (emscripten port of libxm, with cool visuals) and xmgl (cool visuals as well, but with real OpenGL and JACK).

Possible libxm improvements

Per-effect granularity

Considering that, in a real prod, you don't actually need to implement all the effects, you can also shave off some code there. One way of doing so would be to write a "pre-preprocessor" for libxm, which analyzes a .xm file, lists its effects, and generates a header file which is then included when compiling libxm. Said header file would #define which features/effects are needed or not needed. It is a lot of work (and a ton of #ifdefs to add in the playback routine, with all the added complexity of maintaining code like that) and the gains, in terms of code size, aren't clear.
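To make the idea concrete, here is a minimal sketch of what the header-generating half of such a tool could look like. It assumes the pattern data has already been unpacked into (effect type, parameter) pairs; the `xm_fx_slot` type, both function names and the `XM_HAS_EFFECT_xx` macros are made up for illustration and do not exist in libxm.

```c
#include <stddef.h>
#include <stdint.h>
#include <stdio.h>
#include <string.h>

/* Hypothetical: one effect column entry, already unpacked from a pattern. */
typedef struct {
    uint8_t type;
    uint8_t param;
} xm_fx_slot;

/* Mark every effect type that occurs in the slots. An entry with type 0
 * and parameter 0 is treated as "no effect" and skipped. */
void scan_effects(const xm_fx_slot *slots, size_t n, uint8_t used[256]) {
    memset(used, 0, 256);
    for (size_t i = 0; i < n; ++i) {
        if (slots[i].type == 0 && slots[i].param == 0) continue;
        used[slots[i].type] = 1;
    }
}

/* Write one #define per used effect type; returns how many were written.
 * The macro names are an assumption, not something libxm understands. */
int write_effect_header(FILE *out, const uint8_t used[256]) {
    int count = 0;
    for (int fx = 0; fx < 256; ++fx) {
        if (!used[fx]) continue;
        fprintf(out, "#define XM_HAS_EFFECT_%02X 1\n", (unsigned)fx);
        ++count;
    }
    return count;
}
```

The playback routine would then wrap each effect handler in the matching `#ifdef`, which is exactly where the maintenance burden comes from.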

Unsafe loading

Another way to save space (which is somewhat implemented in libxm) is, because you're only loading one known module, to disregard all safety concerns about input data and comment out all the sanity checks. In libxm, this is done by playing with the value of XM_DEFENSIVE. It's kind of useless (in terms of saving space) at the moment, but I could go a lot more in-depth with it.
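As an illustration of the pattern (not libxm's actual loader code), a bounds check guarded by XM_DEFENSIVE could look like the sketch below. The `read_u16le` helper is hypothetical; only the macro name comes from libxm.

```c
#include <stddef.h>
#include <stdint.h>

#ifndef XM_DEFENSIVE
#define XM_DEFENSIVE 1 /* assumed default for this sketch */
#endif

/* Hypothetical helper: read the 16-bit little-endian value at `offset`.
 * Returns 0 on success, -1 if the read would go past the end of `data`
 * (that check only exists when XM_DEFENSIVE is nonzero). */
int read_u16le(const uint8_t *data, size_t len, size_t offset, uint16_t *out) {
#if XM_DEFENSIVE
    if (offset > len || len - offset < 2) return -1;
#else
    (void)len; /* trusted input: the check is compiled out entirely */
#endif
    *out = (uint16_t)(data[offset] | (data[offset + 1] << 8));
    return 0;
}
```

Building with `-DXM_DEFENSIVE=0` removes the branch from the binary; whether that adds up to a meaningful size gain depends on how many such checks the loader contains.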

Don't load at all

Another way to skip all the loading code is to just dump, in the binary, the internal structure of the libxm context once the module has been loaded. This might save quite some space and may allow for better compressibility compared to embedding the .xm file as-is.

Even though the libxm context structure is quite simple (I went out of my way to only do one malloc() call for the whole context), it contains arrays, pointers, strings, you name it. It's not as simple as writing it, then reading it and casting it to a xm_context pointer. So some amount of loading code would still be needed.
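The pointer problem can be sketched on a toy structure. Because everything lives in a single allocation, each internal pointer can be replaced by its byte offset from the start of the block before dumping, and turned back into a pointer after the bytes have been copied somewhere else. The `mini_context` type and the `mini_*` functions below are hypothetical stand-ins, much simpler than libxm's real context, and the integer/pointer casts assume a mainstream platform where such round trips work.

```c
#include <stddef.h>
#include <stdint.h>
#include <stdlib.h>
#include <string.h>

/* Hypothetical miniature context: a header followed by its payload. */
typedef struct {
    uint32_t num_samples;
    int8_t *sample_data; /* points inside the same allocation */
} mini_context;

/* Allocate header and payload with a single malloc() call. */
mini_context *mini_create(const int8_t *samples, uint32_t n) {
    mini_context *ctx = malloc(sizeof *ctx + n);
    if (!ctx) return NULL;
    ctx->num_samples = n;
    ctx->sample_data = (int8_t *)(ctx + 1);
    memcpy(ctx->sample_data, samples, n);
    return ctx;
}

/* Before dumping: replace the pointer by its offset from the block start. */
void mini_freeze(mini_context *ctx) {
    uintptr_t off = (uintptr_t)((char *)ctx->sample_data - (char *)ctx);
    ctx->sample_data = (int8_t *)off;
}

/* After copying the dumped bytes: turn the offset back into a pointer. */
void mini_thaw(mini_context *ctx) {
    ctx->sample_data = (int8_t *)((char *)ctx + (uintptr_t)ctx->sample_data);
}
```

The "thaw" step is the remaining loading code: one fix-up per pointer, which should still be far smaller than a full .xm parser.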

Programmatically generated samples

On complex .xm files, the bulk of the space is taken by the samples. For complex samples, it could be more space-efficient to write the code to generate the waveform. At that point though, why not just give up and write a real softsynth?
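For a sense of scale, here is a minimal sketch of a generated sample: a triangle wave written into a signed 8-bit buffer using only integer arithmetic. The function name and the choice of waveform are illustrative assumptions; a real prod would want something richer, which is precisely the slippery slope towards a softsynth.

```c
#include <stddef.h>
#include <stdint.h>

/* Fill `out` with `n` signed 8-bit samples of a triangle wave.
 * `period` is the cycle length in samples and is assumed to be a
 * nonzero multiple of 4. */
void gen_triangle(int8_t *out, size_t n, size_t period) {
    long quarter = (long)(period / 4);
    for (size_t i = 0; i < n; ++i) {
        long p = (long)(i % period);
        long v;
        if (p < quarter)          v = p;                /* rising, 0 to peak   */
        else if (p < 3 * quarter) v = 2 * quarter - p;  /* falling to trough   */
        else                      v = p - (long)period; /* rising back to 0    */
        out[i] = (int8_t)(v * 127 / quarter);
    }
}
```

A few dozen bytes of machine code replace what would otherwise be `n` bytes of sample data, so the trade only pays off for long or numerous samples.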

XM-using demos

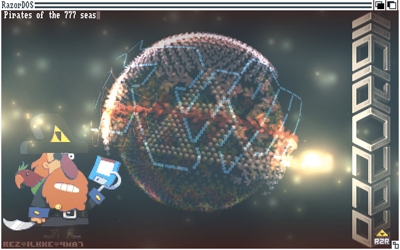

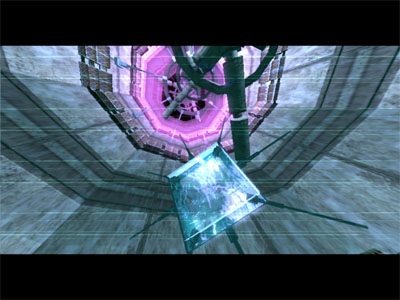

Some demos made by rez use .xm modules for their sound.

It seems rez's later prods use the V2 softsynth. Sadly the core of the project (the synthesizer itself) is not libre.

Softsynths are complex

A few days ago, I listened (thanks to Nectarine radio) to the soundtrack of Memories from the MCP, a 64k demo by Brain Control, and I was really impressed by the audio. Of course, that's mostly due to the interesting melodies and variation, but the synths themselves also sounded particularly rich and interesting.

It turns out the softsynth used for this demo is Tunefish, which is libre and also fits in about 10KiB of compressed code. It is also possible to use it on GNU/Linux, but as a VST plugin.

I want to do something similar, but using LV2. Unfortunately, while I do know my way around modules and their internals, I have no idea how a softsynth works. Is it like a "real" synth such as the DX7, which uses frequency modulation? Of course, I know what a Fourier transform is and I have a basic understanding of signal processing. Is that enough to create an actual piece of software good enough for a 64k prod?

To my understanding, a softsynth has two "sides":

The "editing side", where it typically acts as a plugin for some kind of DAW. That's where you compose the song and fiddle with the knobs until the instruments sound good.

This step is probably the most annoying, because you have to deal with LV2 internals, with the joy that is MIDI, and with creating a fancy GUI. Extracting the data (when "rendering" the song in the DAW) to some compact format is also a challenge (how to deal with multiple instruments? with ticks that tweak the values of some effect in real time?).

The "playing side", where you play back some form of data (preset instruments and patterns). On this side, compressed machine code (and data) size matters.

This is the part you actually embed in the demo. Static linking, or even direct inclusion of the source file is the way to go there.
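To get a feel for how small the playing side can start out, here is a toy sketch: a list of notes rendered as a square wave with a 32-bit phase accumulator, integer math only. The `toy_note` format and `toy_render` function are entirely hypothetical, have nothing to do with how Tunefish or V2 work, and assume every note frequency is below the sample rate.

```c
#include <stddef.h>
#include <stdint.h>

/* Hypothetical compact song format: one entry per note. */
typedef struct {
    uint16_t freq_hz; /* 0 means a rest */
    uint16_t length;  /* duration in output samples */
} toy_note;

/* Render the notes as a square wave into `out` (at most `max` samples).
 * Returns the number of samples written. Assumes freq_hz < rate. */
size_t toy_render(const toy_note *notes, size_t num_notes,
                  uint32_t rate, int16_t *out, size_t max) {
    size_t written = 0;
    uint32_t phase = 0; /* wraps around exactly once per waveform cycle */
    for (size_t i = 0; i < num_notes; ++i) {
        /* Phase increment per sample: freq / rate, scaled to 2^32. */
        uint32_t step = (uint32_t)(((uint64_t)notes[i].freq_hz << 32) / rate);
        for (uint16_t s = 0; s < notes[i].length && written < max; ++s) {
            int16_t v = 0;
            if (notes[i].freq_hz != 0)
                v = (phase & 0x80000000u) ? -8192 : 8192;
            out[written++] = v;
            phase += step;
        }
    }
    return written;
}
```

Everything interesting about a real softsynth (envelopes, filters, richer oscillators, several voices) is missing here; the sketch only shows the shape of the "data in, samples out" loop that ends up embedded in the demo.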

I will probably start by creating something very simple, to get the general idea of it and to also learn from my mistakes. I'll either have to get to like Ardour for composing, or find some compatible tracker interface; jacker looks promising.